Website Data Extraction/Scraping & Form Filling Expert

Web scraping can be hugely advantageous to businesses, allowing them to function more effectively and keep up-to-date with information that is on specific websites more frequently and accurately.

This is especially true when you consider that the applications created can be run by numerous members of staff on an ad-hoc basis, or even automated to run every day at set times. They can also give access to complex data from suppliers for more effective merchandising, or keep internal systems updated more frequently with stock and pricing information.

This can also be a very quick process: most projects take only a few hours to complete, and small projects then take just seconds to run, depending on their complexity & the speed of the user’s connection to the internet.

I have several years’ experience with web scraping over many projects and requirements. In this article I cover the details of extraction in more depth and include examples where suitable. If you have a project in mind, contact me today with your requirements.

I specialise in small & medium scale scraping projects, such as extracting data from supplier websites for product information and stock & price updates.

However, I can also readily tackle multi-tiered extractions and create clean data from complex situations, ready to import into 3rd party applications with little to no input from the user.

Not only can most data be extracted from most websites, data can also be posted to websites from data files such as CSV. So it’s not just about extracting product, service or article data from a website: it could also be form filling for job applications, listing products on websites or sending online dating requests.

If you can do it in a web browser, then it can most likely be automated.

The possibilities are almost endless.

If you have a project in mind, Contact Matthew today; it could be completed in just a few hours.

Getting The Edge With Data Extraction

Using automated tools to grab data from or post data to the web could trim hours off each day or week. Extracting the latest stock & prices from suppliers could mean higher profitability and fewer back-orders. It could even mean obtaining reams of data from suppliers’ websites, giving your business the edge over your competitors.

It doesn’t matter if it’s behind password-protected content; if you can “see it” in your web browser, chances are it can be extracted. If you’re entering data manually into website forms, chances are high that it can be automated too.

I’ve worked on numerous projects where clients have been able to ensure that their back-office tools are as up to date as possible with the latest information from suppliers. These projects have even allowed businesses to work with suppliers they’ve never been able to work with before, because extracting data from those suppliers’ websites had been too restrictive in either time or cost.

Knowing what your competitors’ prices are can be a huge advantage when it comes to pricing, especially in the eCommerce environment we have today. If you’ve got the product data and it can be matched to other sites, then with one click and within a few minutes the latest pricing information from competitors could be yours. As many times as you want, whenever you want.

Scraping & data extraction can solve this in a cost-effective manner. One script, used over and over. Any time you want, by however many members of staff you have.

If you want the edge, Contact Matthew today.

The Required Tools Are Free

Using two free applications, the Firefox web browser and a free add-on called iMacros, anything from simple to very complex web automation can be completed.

Because these tools are free to use, any completed extraction or processing project can be run by the owner or staff members as many times and as often as they require.

Extra processing can also be added using JavaScript, to handle complex data inputs or data extracted from websites. I cover this in more detail in the “Extra Data Processing” section.

Don’t worry if you’ve never used either of these before. If you’ve used a web browser and can press a button, it’s that simple. I’ll help you get started and it’s very easy to do. I also include instructional videos to get you set up. It’ll take no more than 10 minutes.

Simple Extraction

In this scenario, data elements from a single page can be extracted and then saved to a CSV file.

Example:

This could be a product detail page of a TV and the required elements, such as:

- Product title

- Price(s)

- Stock number

- Model number

- Images

- Product specifics

- Descriptions

- Reviews

All of these are extracted and then saved to a CSV file for your own use.

The time it takes to make a simple extraction of data from a single page varies greatly. This is because the data on the page can sometimes be very poorly formatted, or there may be lots of fields that need to be extracted, which can take quite some time.
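As a rough illustration of the idea only (the projects described here are built as iMacros macros, not Python), a single-page extraction can be sketched as below. The sample HTML, the element ids and the field names are all assumptions made up for this example; a real product page would need its own selectors.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical product page; the ids below are assumptions for illustration.
SAMPLE_HTML = """
<html><body>
  <h1 id="title">Example 40in LED TV</h1>
  <span id="price">299.99</span>
  <span id="model">TV-40-EX</span>
  <span id="stock">12</span>
</body></html>
"""

FIELDS = ["title", "price", "model", "stock"]


class FieldExtractor(HTMLParser):
    """Collects the text of elements whose id is listed in FIELDS."""

    def __init__(self):
        super().__init__()
        self.current = None  # id of the element currently being read, if any
        self.row = {}

    def handle_starttag(self, tag, attrs):
        element_id = dict(attrs).get("id")
        if element_id in FIELDS:
            self.current = element_id

    def handle_data(self, data):
        if self.current is not None:
            self.row[self.current] = self.row.get(self.current, "") + data.strip()

    def handle_endtag(self, tag):
        self.current = None


def extract_row(html):
    """Return a dict of field name -> extracted text for one page."""
    parser = FieldExtractor()
    parser.feed(html)
    return parser.row


def to_csv(rows):
    """Serialise a list of row dicts to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


row = extract_row(SAMPLE_HTML)
csv_text = to_csv([row])
print(csv_text)
```

In practice the CSV text would be written to a file rather than printed, and pages with poor or inconsistent markup would need more defensive handling than this sketch has.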

If you have a project in mind, Contact Matthew today; it could be completed in just a few hours.

Extra Data Processing

Extra processing can be applied to the extracted data before saving to a CSV file. This is very handy when you only want or require cleaned data to be saved. Most of the time it’s obvious that cleaning is needed, and basic cleaning of the data is included in the macro.

The quickest way of identifying any processing you require on extracted data is to provide an example file showing how you would like the final data to look.

Example:

If one of the extracted fields was a complex data field, such as an email address held with other data in JavaScript, like this:

<script language="javascript" type="text/javascript">var contactInfoFirstName = "Vivian"; var contactInfoLastName = "Smith"; var contactInfoCompanyName = " REALTY LLC"; var contactInfoEmail = "[email protected]"; </script>

Instead of including the extra information in the export, the email address can be identified and only that data field is extracted. Or if all the data held in the JavaScript is required, this could be split into separate columns, such as:

First Name, Last Name, Company Name, Email Address

Vivian, Smith, REALTY LLC, [email protected]

Also, if the data needs to be formatted for import into a 3rd party application, such as ChannelAdvisor, eSellerPro, Linnworks or a website application, this isn’t a problem either. I’m exceptionally competent with Microsoft Excel & VBA and can help you leverage the gained data and format it into a complete solution that requires the least amount of input from you or your staff.
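To show how the split into columns might work, here is a minimal sketch in Python (an illustration only, not the JavaScript used inside the macros). It assumes the variables are written with straight double quotes, and the email address in the sample is a made-up placeholder, not the one from the original page.

```python
import csv
import io
import re

# Sample modelled on the snippet above; the email is a made-up placeholder.
SCRIPT = (
    'var contactInfoFirstName = "Vivian"; '
    'var contactInfoLastName = "Smith"; '
    'var contactInfoCompanyName = " REALTY LLC"; '
    'var contactInfoEmail = "vivian@example.com";'
)

# Matches statements of the form: var <name> = "<value>";
VAR_PATTERN = re.compile(r'var\s+(\w+)\s*=\s*"([^"]*)"\s*;')

# JavaScript variable name -> CSV column heading
COLUMNS = {
    "contactInfoFirstName": "First Name",
    "contactInfoLastName": "Last Name",
    "contactInfoCompanyName": "Company Name",
    "contactInfoEmail": "Email Address",
}


def parse_contact(script):
    """Pull the known variables out of the script text and trim whitespace."""
    found = {name: value.strip() for name, value in VAR_PATTERN.findall(script)}
    return {label: found.get(var, "") for var, label in COLUMNS.items()}


def contact_to_csv(contact):
    """Write one header line and one data line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(contact.keys())
    writer.writerow(contact.values())
    return buffer.getvalue()


contact = parse_contact(SCRIPT)
print(contact_to_csv(contact))
```

Extracting only the email address is then just a matter of reading `contact["Email Address"]` and ignoring the other columns.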

Whether your requirements are basic or highly complex, Contact Matthew today. Your data extraction project could be completed in just a few hours and fully customised to your business requirements.

Paginated Extraction

This can vary from site to site. However, a more complex extraction could involve navigating several pages of a website, such as search results, then navigating to each product in the search results and processing a simple or complex extraction on the product’s detail page.

Example (property) – Website: Homepath.com

In this example, the requirement is not only to extract the data found for a specific property; it is also required for ALL the search results to be extracted.

This would involve extracting all the results and then navigating to each property page and extracting the data on the property detail pages.

The time taken to extract the data from such pages depends on both the number of property results to go through and the amount of data to be extracted from each property details page.

Example (products) – Website: Microdirect.co.uk

In this example, similar to the property one, the requirement is to extract the data from each product’s details page, and to do so for every product on every page of the search results.

The macro would navigate through each of the page results (say 10), identify each of the products, then one-by-one work its way through the products, saving the data to a file.
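The loop described above can be sketched as follows. This is an illustration under stated assumptions: the "website" is a small in-memory dictionary, and the URLs, the `fetch` helper and the field names are all hypothetical. A real macro would load each page in the browser instead.

```python
# Hypothetical in-memory "website": URL -> page content.
SITE = {
    "/search?page=1": {"links": ["/p/1", "/p/2"], "next": "/search?page=2"},
    "/search?page=2": {"links": ["/p/3"], "next": None},
    "/p/1": {"title": "Widget A", "price": "9.99"},
    "/p/2": {"title": "Widget B", "price": "14.50"},
    "/p/3": {"title": "Widget C", "price": "3.25"},
}


def fetch(url):
    """Stand-in for loading a page; here it is just a dictionary lookup."""
    return SITE[url]


def crawl(start_url, max_pages=10):
    """Walk the result pages, visiting every product linked from each one."""
    rows = []
    url = start_url
    pages_seen = 0
    while url is not None and pages_seen < max_pages:
        page = fetch(url)
        for link in page["links"]:
            detail = fetch(link)          # the product details page
            rows.append({"url": link, **detail})
        url = page["next"]                # None on the last results page
        pages_seen += 1
    return rows


rows = crawl("/search?page=1")
for row in rows:
    print(row)
```

The `max_pages` limit is a safety net added for this sketch, so that a site with an endless "next" link cannot keep the loop running forever.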

Need data from pages & pages of a website? Not a problem, Contact Matthew today, it could be completed in just a few hours.

Ultra Complex Extraction

These normally consist of a requirement for data to be processed from a CSV file, followed by external processing & scraping by the macro and then, depending upon the results, possibly further processing or scraping. Such projects are normally very complex and can take some time to complete.

Working with multiple-tiered drop-down boxes (options) falls into this category, as by their very nature they can be complex to deal with. It’s also worth noting that it is possible to work with multiple tiers of options where, for example, making one selection causes sub-options to appear. Sites that need image recognition technologies also fall into this category.

However, it’s easier to explain with an example rather than go into minute detail.

Example

For this example, you have a CSV file containing a number of terms that need to be searched for on a dating website. Once these searches are made, the details are saved and each of the persons found then needs to be contacted/emailed through another form.

The macro will make intelligent searches for these terms and save the matching results (these are likely to be paginated) to a separate file. Then, for each result that was saved, the macro will send a customised contact message through another form found on the same or a different website.
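The overall flow (read terms from a CSV file, search, save the results, then post a message per result) can be sketched as below. Everything here is a stand-in: the `search` and `send_message` functions, the profile ids and the message template are hypothetical, and nothing touches a real website. Skipping profiles that have already been found is a design choice made for this sketch, not something stated above.

```python
import csv
import io

# Hypothetical input file with one search term per line.
TERMS_CSV = "term\nhiking\ncooking\n"

# Stand-in for the site's search results: term -> matching profile ids.
FAKE_INDEX = {
    "hiking": ["profile-101", "profile-102"],
    "cooking": ["profile-102", "profile-103"],
}


def search(term):
    """Stand-in for running a (possibly paginated) search on the site."""
    return FAKE_INDEX.get(term, [])


def send_message(profile_id, text, outbox):
    """Stand-in for filling in and submitting the contact form."""
    outbox.append((profile_id, text))


def run(terms_csv, template):
    terms = [row["term"] for row in csv.DictReader(io.StringIO(terms_csv))]

    # Step 1: search for every term and save each new match once.
    results = []
    seen = set()
    for term in terms:
        for profile in search(term):
            if profile not in seen:
                seen.add(profile)
                results.append((term, profile))

    # Step 2: send one customised message per saved result.
    outbox = []
    for term, profile in results:
        send_message(profile, template.format(term=term), outbox)
    return results, outbox


results, outbox = run(TERMS_CSV, "Hi, I saw you like {term} too.")
for entry in outbox:
    print(entry)
```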

Do you feel your requirements are complicated or the website you’d like to extract from or post to isn’t simple? Contact Matthew today, I’ll be able to let you know exact times & can create the project for you at a fixed cost.

Saving Data & File Types

Extracted data is normally saved as CSV files. The data is separated by commas “,” and will open in Microsoft Excel or Open Office easily. For most applications using a comma will work perfectly.

However, sometimes the extracted data is complex (such as raw HTML) and contains commas of its own, so using a comma as the separator causes fields to leak into neighbouring columns when viewed in Microsoft Excel or Open Office. This is when other characters, such as the pipe “|”, come in very handy for separating the data fields (e.g. title and image).

The separator can be any single character or combination of characters you wish. Some common examples are:

- Comma “,”

- Tab (the tab character)

- Pipe “|”

- Double pipe “||”

- Semi-colon “;”

- Double semi-colon “;;”

It will be quite clear from the outset which separator is required, either from the data being extracted or from the project’s requirements. If you have any special requirements, please discuss these beforehand.

XML or SQL insert statements can also be created if desired. However, this can add several hours to a project due to the extra complexity.
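A short sketch of how the separator choice plays out, using Python’s standard `csv` module purely as an illustration (it is not the tool the macros use). The sample row is made up. Note that the `csv` module only accepts single-character delimiters, so the double-pipe case is joined by hand here, without any quoting.

```python
import csv
import io

# Made-up row: a title, a raw HTML description containing a comma, an image.
ROW = ["Example TV", "<p>Slim, light TV</p>", "tv.jpg"]


def write_delimited(rows, delimiter=","):
    """Write rows with a single-character delimiter, quoting where needed."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter=delimiter, lineterminator="\n")
    writer.writerows(rows)
    return buffer.getvalue()


def join_multichar(rows, separator="||"):
    """Join fields with a multi-character separator; no quoting is applied."""
    for row in rows:
        for field in row:
            if separator in field:
                raise ValueError("separator appears inside a field: " + field)
    return "".join(separator.join(row) + "\n" for row in rows)


comma_text = write_delimited([ROW])
pipe_text = write_delimited([ROW], delimiter="|")
double_pipe_text = join_multichar([ROW])

# Reading the comma version back recovers the original three fields,
# because the field containing a comma was wrapped in quotes.
round_trip = next(csv.reader(io.StringIO(comma_text)))

print(comma_text, pipe_text, double_pipe_text, sep="")
```

With a comma, the HTML field has to be wrapped in quotes to survive; with a pipe or double pipe, the same row needs no quoting at all, which is why those separators suit data such as raw HTML.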

File types an issue? I can pre-process data files beforehand in other applications if needed. Contact Matthew today; it could be completed in just a few hours.

Speed of Extraction/Form Filling

As a general rule, the projects I create run exceptionally fast. However, there are two factors that will limit their speed:

- The speed of the website being interacted with

- The speed of your connection to the internet

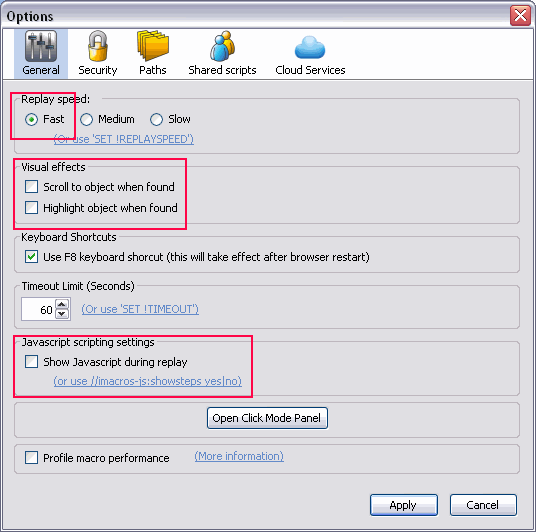

You can also make project scripts run much faster by ensuring that the options in your iMacros settings are set exactly the same as those shown in the screenshot.

You can find this options page by clicking the “Edit” tab on the iMacros sidebar, then pressing the button called “Options”.

Even if the above looks complicated, it’s not. Instructional videos are included and I’ll make it exceptionally easy for you. Contact Matthew today; it could be completed in just a few hours.

Exceptions & “Un-Scrape-able” Pages

It is important that your processing requirements are discussed beforehand with examples, so that I can confirm whether or not automated scraping will suit your requirements. In most cases it will, but sometimes it’s just not possible.

In some cases, it is not possible to extract data from pages over & over due to:

- A poor ‘make up’ of the page

- Inconsistent page layouts

- Page structures that vary enormously from one page to another

- Use of Flash or excessive use of AJAX

- User code ‘CAPTCHA’ boxes (like reCAPTCHA)

When this happens, the only consistent method of extracting data from such pages is by a human, and scraping is unlikely to be suitable for your requirements. This is rare, but does occur. If I identify this (and I will very quickly), I’ll let you know ASAP.

I am unwilling to work with data of questionable content. The items below are really just common sense; I’ve added them for completeness.

- Adult orientated material (porn is a no, services are a no, ‘products’ are ok)

- Sites that are focused towards children

- Identifiable records on people such as medical records (order related data is fine if they are yours).

- Most government sites

- Situations where I suspect the data will be grossly misused for fraudulent or illegal purposes.

Unsure what your requirements are, or just not sure if web scraping is the right way forward for your business? Contact Matthew now, I’ll be able to help you and turn it into plain English for you.

What Are the Limits of Extraction/Processing?

Most normal limitations are caused by very large or very deep page requirements of a project. That doesn’t mean they’re not possible, just that it could take some time to code and also for you to run each time.

The projects that I create suit smaller scale situations, such as one-off extractions or extractions that need to be run by the owner over and over, such as on a daily basis to collect the latest product & pricing information from a supplier.

The real limitations come into force when the requirements are for huge scale extraction, such as hundreds of thousands of records, or for exceptionally complex and exceptionally deep extractions. This is when using languages such as Python, C++ or Perl, or other languages that allow spidering of websites, would be more suitable.

This is not a speciality of mine, however due to my experience with scraping, I can assist you with project management of such projects with 3rd party contractors. Contact Matthew now if this is what you need.

Anonymity & Use of Proxies

If you need to keep the activities of such scripting hidden to remain anonymous, then this can be achieved on small scale projects using free proxies with no interaction from yourself.

In larger or more repetitive situations, I can either help you set up your browser to use free proxies (these can be unreliable at times) or, as I’ve found works best in most cases, leverage inexpensive proxy services that are very easy to use and, most importantly, reliable.

If this is a concern for you, don’t worry, I’ve done it all before. Contact Matthew now if this is a requirement for your project.

Do you provide ‘open’ code?

For ‘small’ or ‘simple’ macros, yes, the code is open and you or your development team are able to edit it as required.

However, for some complex or ultra complex macros the code is obfuscated due to the extra functions that are normally included. This is non-negotiable, as I have developed custom functions that allow me to uniquely deal with complex situations of data extraction & posting.

Is Web Scraping Legal?

The answer to this can be both yes and no depending upon the circumstances.

Legal Data Extraction

For example if you own the site and data that is being extracted, then you own the data and you’re using it for your own purposes. If you gain permission beforehand, for example from a supplier to extract data from their website, this is also legal.

I have worked on projects where an employee has left a company and there is no access to the back-ends/administration consoles of websites and the only way of obtaining the data held on the site is by scraping. I’ve done BuddyPress, WordPress, PHP-Nuke, phpBB, e107 & vBulletin sites previously to name just a few.

I have also completed many projects where product data is extracted for use by a business to obtain up-to-date pricing and stock information from suppliers’ websites, along with extra product & categorisation data too.

Illegal Data Extraction

Because the macros are run on your or your staff’s computers, scenarios other than those where the sites are owned by you or permission has been granted fall to your discretion.

I cannot be held responsible for any legal action that may result from you running such scripts on 3rd party websites. As such, I strongly recommend that you contact the 3rd party to seek consent and check any privacy or usage policies that they may have prior to extraction.

Contact Matthew

If you’ve got a clear idea of what you’d like done, or even if you’re just not sure whether it’s possible, Contact Matthew today and I’ll be able to tell you if it is possible, how long it will take, how much it will cost and when the completed project will be with you.